Here at Outlyer we develop a monitoring system. To be useful to our customers, it needs to integrate with several systems to obtain data that would otherwise be hard to find. In this post, we’ll talk about how we used a Linux kernel feature to obtain load averages for containers.

Getting metrics from Docker containers

Docker adoption has grown rapidly in recent years. It has become something of a mainstay in our industry, with many tools building on it. Interest in monitoring Docker environments is growing too, so we need a reliable way to obtain metrics from them.

In a previous post, we described how to get metrics such as CPU usage percentage, memory, network and disk usage from cgroups. One useful metric that is missing is the load average.

What’s the load average and why is it useful?

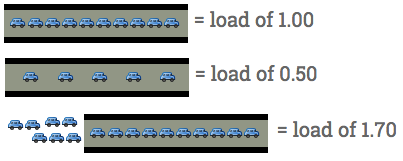

You can think of load as a measure of how “busy” your system is. It counts the processes that are either running or waiting to run: a load of 0 means that your system is idle, and each such process adds 1 to the load value.

The image above represents the load average (not the instantaneous load).

Source: Scout

This is useful because CPU percentage only tells you how busy your cores are right now (the percentage is derived from periodic measurements), not how much work your system has queued up overall. CPU percentage can also be misleading in other ways.

For instance, you may find that one of your services is extremely slow, and on inspection CPU usage is at 100% (on a single-core machine). Yet strace, or a similar tool, shows that your process is not actually doing anything.

So what could the problem be? Your process may be waiting for its turn on the CPU while other tasks keep it busy. Checking the load tells you whether this is the case: a load well above 1 on a single core means tasks are queuing up.

What about a multi-core system?

On a multi-core system, what the load value represents doesn’t change. What changes is that you can now run X tasks in parallel, where X is the number of cores. So a system with 4 cores and a load of 4 is not over-subscribed: it can run all 4 current tasks at once.
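The comparison above amounts to dividing the load by the core count. Here is a minimal sketch in Python that uses the standard library’s `os.getloadavg()` and `os.cpu_count()` for the host. The helper names `load_per_core` and `is_oversubscribed` are hypothetical and chosen only for illustration:

```python
import os


def load_per_core(load: float, cores: int) -> float:
    """Divide a load value by the core count; above 1.0 means tasks are queuing."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return load / cores


def is_oversubscribed(load: float, cores: int) -> bool:
    # More running/waiting tasks than cores means some must wait for a turn.
    return load_per_core(load, cores) > 1.0


if __name__ == "__main__":
    one_min, five_min, fifteen_min = os.getloadavg()  # host-wide values (Unix only)
    cores = os.cpu_count() or 1
    print(f"1-minute load per core: {load_per_core(one_min, cores):.2f}")
```

A value of exactly 1.0 per core means every core is busy with nothing left waiting. Anything above that means some tasks are queuing.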

How can I find the current load?

On a host, you usually find the load average by running a tool such as top or uptime, or by reading the /proc/loadavg file. These give you the load average over the past 1, 5 and 15 minutes. /proc/loadavg also reports the number of currently runnable tasks alongside the total number of tasks.
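/proc/loadavg is a single line with five whitespace-separated fields: the 1, 5 and 15 minute averages, a runnable/total count of scheduling entities, and the PID of the most recently created process (see proc(5)). The following is a small parser sketch. The `LoadAvg` type and the function names are our own:

```python
from typing import NamedTuple


class LoadAvg(NamedTuple):
    one: float
    five: float
    fifteen: float
    runnable: int   # currently runnable scheduling entities (processes, threads)
    total: int      # scheduling entities that currently exist
    last_pid: int   # PID of the most recently created process


def parse_loadavg(text: str) -> LoadAvg:
    """Parse the single line found in /proc/loadavg."""
    one, five, fifteen, tasks, last_pid = text.split()
    runnable, total = tasks.split("/")
    return LoadAvg(float(one), float(five), float(fifteen),
                   int(runnable), int(total), int(last_pid))


def read_host_loadavg(path: str = "/proc/loadavg") -> LoadAvg:
    with open(path) as f:
        return parse_loadavg(f.read())
```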

You can see how the kernel does it here: http://elixir.freeelectrons.com/linux/v2.6.32/source/kernel/sched.c#L2981

Essentially, it periodically (roughly every five seconds) measures the current number of running and uninterruptible tasks, and applies exponential decay so that recent measurements count for more in the overall value than older ones.
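The exponential decay can be sketched in floating point. Each new sample `n` updates an average `a` as `a = a * d + n * (1 - d)`, where the decay is `d = e^(-interval/window)`. The five-second interval matches the kernel’s sampling period. The kernel itself uses fixed-point arithmetic (for example, 1884/2048 ≈ e^(-5/60) for the 1-minute average), so this float version approximates it rather than copying it. The class and constant names are hypothetical:

```python
import math
from typing import List

SAMPLE_INTERVAL = 5.0           # seconds between samples, like the kernel's period
WINDOWS = (60.0, 300.0, 900.0)  # 1, 5 and 15 minute averages


class LoadAverage:
    """Floating-point approximation of the kernel's decaying load average."""

    def __init__(self, interval: float = SAMPLE_INTERVAL):
        # Fraction of the previous average kept on each update: e^(-interval/window).
        self.decay = [math.exp(-interval / w) for w in WINDOWS]
        self.values = [0.0, 0.0, 0.0]

    def update(self, active_tasks: int) -> List[float]:
        """Fold in one sample of running + uninterruptible tasks."""
        self.values = [
            v * d + active_tasks * (1.0 - d)
            for v, d in zip(self.values, self.decay)
        ]
        return self.values
```

If the task count stays constant, all three averages converge to it. The 15-minute value gets there much more slowly because its decay factor is closest to 1.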

What about Docker?

Inside a Docker container, unfortunately, we cannot rely on the same mechanisms as we would on a host. Docker containers are essentially processes running under their own namespaces, and /proc/loadavg is not namespace-aware: read from inside a container, it reports the host’s load. We can still use a tool such as ps to count the running tasks, but doing so from outside the container is expensive. It involves running something like docker exec <container_id> ps aux for every container, every time we take a measurement.
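For comparison, the ps approach boils down to counting tasks whose state is R (running or runnable) or D (uninterruptible sleep). This sketch assumes that the container ships a ps supporting `-eo stat` (BusyBox builds may not) and that the docker CLI is on the PATH. The function names are hypothetical. `ps` lists processes rather than threads, and the ps process itself is in state R while it runs, so the count is only approximate:

```python
import subprocess


def load_from_ps(output: str) -> int:
    """Count tasks in state R or D in `ps -eo stat` output."""
    lines = output.strip().splitlines()
    count = 0
    for line in lines[1:]:  # skip the STAT header line
        state = line.strip()[:1]
        if state in ("R", "D"):
            count += 1
    return count


def container_load_via_ps(container_id: str) -> int:
    # Spawns a docker exec process per container per sample: the cost noted above.
    result = subprocess.run(
        ["docker", "exec", container_id, "ps", "-eo", "stat"],
        capture_output=True, text=True, check=True,
    )
    return load_from_ps(result.stdout)
```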

Asking the kernel

What we can do, though, is ask the kernel directly. As mentioned above, Docker containers can be thought of as processes running under a different namespace, and the kernel keeps track of every process!

Netlink

Netlink is a socket-based interface in Linux that allows userspace applications to communicate with the kernel. The protocol exchanges binary messages, each consisting of a header followed by a payload.
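To make the header concrete: every netlink message starts with a 16-byte `struct nlmsghdr`, declared in `<linux/netlink.h>`. It holds the length, type, flags, sequence number and sender port id, in native byte order. Messages are padded to 4-byte boundaries. The sketch below only packs and unpacks bytes and does not open a socket. The helper names are hypothetical:

```python
import struct

NLMSG_HDR = struct.Struct("=IHHII")  # nlmsg_len, nlmsg_type, nlmsg_flags, nlmsg_seq, nlmsg_pid
NLM_F_REQUEST = 0x1


def nlmsg_align(length: int) -> int:
    """Round up to the 4-byte boundary netlink uses (NLMSG_ALIGN)."""
    return (length + 3) & ~3


def pack_nlmsg(msg_type: int, flags: int, seq: int, payload: bytes, pid: int = 0) -> bytes:
    """Prefix a payload with a netlink header, then pad to 4 bytes."""
    length = NLMSG_HDR.size + len(payload)  # nlmsg_len excludes trailing padding
    header = NLMSG_HDR.pack(length, msg_type, flags, seq, pid)
    return header + payload + b"\0" * (nlmsg_align(length) - length)


def unpack_nlmsg(data: bytes):
    """Split one message back into (header fields, payload)."""
    fields = NLMSG_HDR.unpack_from(data)
    return fields, data[NLMSG_HDR.size:fields[0]]
```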

Netlink messages are grouped into “families”, several of which relate to network information (NETLINK_ROUTE, for example, carries routing and network interface data).

Taskstats and Cgroupstats

If we search the documentation, we can find details for Taskstats and Cgroupstats.

These are interfaces that build upon the NETLINK_GENERIC family. Taskstats gives per-process statistics, and Cgroupstats gives per-cgroup statistics.
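Generic netlink families such as Taskstats are assigned numeric ids at runtime. A client therefore first asks the generic netlink controller (`GENL_ID_CTRL`, id 0x10) for the id of the family named "TASKSTATS", which also serves the cgroupstats commands. The following sketch builds that lookup request, with constants taken from `<linux/genetlink.h>`. The helper names are ours. Sending the request would need a raw `AF_NETLINK` socket opened with protocol `NETLINK_GENERIC` (16):

```python
import struct

NLMSG_HDR = struct.Struct("=IHHII")  # struct nlmsghdr
GENL_HDR = struct.Struct("=BBH")     # struct genlmsghdr: cmd, version, reserved
NLA_HDR = struct.Struct("=HH")       # struct nlattr: nla_len, nla_type

NLM_F_REQUEST = 0x1
GENL_ID_CTRL = 0x10                  # the generic netlink controller family
CTRL_CMD_GETFAMILY = 3
CTRL_ATTR_FAMILY_NAME = 2


def align4(n: int) -> int:
    return (n + 3) & ~3


def pack_attr(attr_type: int, value: bytes) -> bytes:
    """Encode one netlink attribute (type-length-value), padded to 4 bytes."""
    length = NLA_HDR.size + len(value)
    return NLA_HDR.pack(length, attr_type) + value + b"\0" * (align4(length) - length)


def pack_genl_request(family_id: int, cmd: int, attrs: bytes, seq: int = 1) -> bytes:
    payload = GENL_HDR.pack(cmd, 1, 0) + attrs
    length = NLMSG_HDR.size + len(payload)
    return NLMSG_HDR.pack(length, family_id, NLM_F_REQUEST, seq, 0) + payload


def family_lookup_request(name: str, seq: int = 1) -> bytes:
    """Build a CTRL_CMD_GETFAMILY request asking for the id of family `name`."""
    attr = pack_attr(CTRL_ATTR_FAMILY_NAME, name.encode() + b"\0")  # NUL-terminated
    return pack_genl_request(GENL_ID_CTRL, CTRL_CMD_GETFAMILY, attr, seq)
```

The kernel’s reply carries the id in a 16-bit `CTRL_ATTR_FAMILY_ID` attribute. That id is then used as the message type for subsequent Taskstats or Cgroupstats requests.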

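As the cgroupstats documentation explains, you can pass the interface a cgroup (as a file descriptor for the cgroup’s directory) and get back counts of that cgroup’s tasks in each state: sleeping, running, stopped, in uninterruptible sleep, and waiting for I/O. In particular, this gives us the number of tasks that are running or in uninterruptible sleep.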
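Once the family id is known, a cgroupstats request is a generic netlink message with command `CGROUPSTATS_CMD_GET` and a single `CGROUPSTATS_CMD_ATTR_FD` attribute that holds a file descriptor for the cgroup directory. The following is a sketch under those assumptions. The numeric constants come from `<linux/cgroupstats.h>`, where the cgroupstats commands are numbered after the taskstats ones. The family id is a parameter because it is only known at runtime, and the helper names are hypothetical. The descriptor must stay open until the reply arrives, because the kernel resolves it in the calling process:

```python
import os
import struct

NLMSG_HDR = struct.Struct("=IHHII")  # struct nlmsghdr
GENL_HDR = struct.Struct("=BBH")     # struct genlmsghdr
NLA_HDR = struct.Struct("=HH")       # struct nlattr

NLM_F_REQUEST = 0x1
CGROUPSTATS_CMD_GET = 4              # numbered after the three TASKSTATS_CMD_* values
CGROUPSTATS_CMD_ATTR_FD = 1


def open_cgroup_dir(path: str) -> int:
    """Open a cgroup directory; the kernel identifies the cgroup by this fd."""
    return os.open(path, os.O_RDONLY | os.O_DIRECTORY)


def cgroupstats_request(family_id: int, cgroup_fd: int, seq: int = 1) -> bytes:
    """Build a CGROUPSTATS_CMD_GET request for the cgroup open on cgroup_fd."""
    value = struct.pack("=I", cgroup_fd)  # the fd travels as a u32 attribute
    attr = NLA_HDR.pack(NLA_HDR.size + len(value), CGROUPSTATS_CMD_ATTR_FD) + value
    payload = GENL_HDR.pack(CGROUPSTATS_CMD_GET, 1, 0) + attr
    length = NLMSG_HDR.size + len(payload)
    return NLMSG_HDR.pack(length, family_id, NLM_F_REQUEST, seq, 0) + payload
```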

As we’ve seen above, the number of running and uninterruptible tasks is all we need to compute a load average!
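The reply carries a `CGROUPSTATS_TYPE_CGROUP_STATS` attribute whose payload is `struct cgroupstats`: five unsigned 64-bit counters. The following decoding sketch computes the instantaneous load the same way as on the host, as running plus uninterruptible tasks. The type and function names are hypothetical. Adding `nr_io_wait` as well would be a judgment call, and we leave it out here:

```python
import struct
from typing import NamedTuple

CGROUPSTATS = struct.Struct("=5Q")  # struct cgroupstats: five __u64 counters


class CgroupStats(NamedTuple):
    nr_sleeping: int
    nr_running: int
    nr_stopped: int
    nr_uninterruptible: int
    nr_io_wait: int


def parse_cgroupstats(raw: bytes) -> CgroupStats:
    """Decode the payload of a CGROUPSTATS_TYPE_CGROUP_STATS attribute."""
    return CgroupStats(*CGROUPSTATS.unpack_from(raw))


def instantaneous_load(stats: CgroupStats) -> int:
    # Same rule as the host load: running tasks plus uninterruptible ones.
    return stats.nr_running + stats.nr_uninterruptible
```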

Putting it all together

Now that we know how to obtain the number of running tasks for a cgroup, it’s just a matter of sampling it periodically for each Docker container (each container has its own cgroup). You can use a netlink library such as gnlpy for Python to handle the communication with the kernel. To approximate the load average, just implement something like what can be found here.

```python
>>> from gnlpy.cgroupstats import CgroupstatsClient
>>> c = CgroupstatsClient()
>>> c.get_cgroup_stats("/sys/fs/cgroup/cpu/docker/3b18cd378543ad1362133622c83e267d843e68aa5f93f67ef76c65f9415f311")
Cgroupstats(nr_sleeping=2, nr_running=2, nr_stopped=0, nr_uninterruptiple=0, nr_iowait=0)
```
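Putting the pieces together, a per-container tracker only needs a function that returns the running plus uninterruptible count for a cgroup path, called once per sampling interval. In this sketch the sampler is injected so that the averaging logic stands alone. `ContainerLoadTracker` is a hypothetical name. The commented gnlpy wiring at the end assumes the client and field names shown in the session above:

```python
import math
from typing import Callable, Dict, Iterable, List

SAMPLE_INTERVAL = 5.0           # seconds between samples
WINDOWS = (60.0, 300.0, 900.0)  # 1, 5 and 15 minute windows


class ContainerLoadTracker:
    """Keep 1/5/15-minute load averages per cgroup from periodic task counts."""

    def __init__(self, sample: Callable[[str], int], interval: float = SAMPLE_INTERVAL):
        # sample(path) must return running + uninterruptible tasks for that cgroup.
        self.sample = sample
        self.decay = [math.exp(-interval / w) for w in WINDOWS]
        self.averages: Dict[str, List[float]] = {}

    def tick(self, cgroup_paths: Iterable[str]) -> Dict[str, List[float]]:
        """Take one sample per cgroup; call this once every `interval` seconds."""
        seen = set()
        for path in cgroup_paths:
            seen.add(path)
            n = self.sample(path)
            old = self.averages.get(path, [0.0] * len(WINDOWS))
            self.averages[path] = [a * d + n * (1.0 - d) for a, d in zip(old, self.decay)]
        # Forget containers that have disappeared since the previous tick.
        for path in list(self.averages):
            if path not in seen:
                del self.averages[path]
        return self.averages


# Hypothetical wiring with gnlpy, assuming the client and field names shown above:
#
#   import time
#   from gnlpy.cgroupstats import CgroupstatsClient
#
#   client = CgroupstatsClient()
#
#   def sample(path):
#       s = client.get_cgroup_stats(path)
#       return s.nr_running + s.nr_uninterruptiple  # field name as printed above
#
#   tracker = ContainerLoadTracker(sample)
#   while True:
#       tracker.tick(["/sys/fs/cgroup/cpu/docker/<container_id>"])
#       time.sleep(SAMPLE_INTERVAL)
```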

Pitfalls

Netlink can only be used by root in Linux, so you’ll need to run the netlink communication with root privileges. If you want to use netlink from inside a Docker container, the container needs to share the host’s network namespace (for example, by starting it with --network host) so that it can communicate with the kernel.