Running an online service isn’t easy. Every day you make complex decisions about how to solve problems, and often there is no right or wrong answer, just different approaches with different results. On the infrastructure side, you have to weigh up where everything will be hosted: on a cloud service like AWS, in your own data centres, a combination of the two, or any number of other options.

Monitoring choices are equally hard. There are the tools that are familiar and a known quantity, some new ones that look interesting from the outside, and then the option to buy a SaaS product.

Let’s imagine, to keep things concrete, that you are looking to move into AWS from your traditional data centre, and want to upgrade from your Nagios, Graphite and StatsD stack to something a bit newer. This is actually a very common scenario that we see every day.

The first decision to make is whether to build or buy. To make that decision properly, you’ll need to explore a little way down each path to understand the pros and cons. I’ll take Prometheus as one of the better options in the build camp, and for the buy side I’ll obviously draw on the experience of our own product.

In some cases, the workload of changing platforms removes the option to build. There aren’t enough people or hours in the day, and if you were to build, something else would have to drop off your list. Monitoring is an easy place to trade money for time. That often isn’t possible for the rest of the migration work, which usually depends on in-house knowledge.

Prometheus

Firstly, let me say that I think that Prometheus is awesome. It is used as the example here because it’s a genuine option for many companies who are moving to the cloud and starting to deploy their software in containers.

Now for a bit of background. Prometheus was designed by SREs for SREs, and was built to monitor a heavily containerised SaaS product. The requirements were to be simple to operate and to store a few weeks’ worth of data: enough to help whoever was fixing or improving the service.

For this reason it’s a single Go binary that’s easy to manage, and the deployment model is fairly decentralised: each team or service might have its own Prometheus server. Federation can be set up between the servers, and additional copies of a server can be run in parallel to address any high-availability (HA) concerns.
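
To make federation a little more concrete: a higher-level Prometheus server typically scrapes a team server’s `/federate` endpoint, passing one or more `match[]` series selectors to choose which series to pull. The minimal Go sketch below only builds that URL. The host name `prometheus-team-a:9090` and the selectors are hypothetical, and in practice you’d declare this as a scrape job in the global server’s configuration rather than write code for it.

```go
package main

import (
	"fmt"
	"net/url"
)

// federateURL builds the address a "global" Prometheus server would scrape
// to pull selected series from a team-level server's /federate endpoint.
// Each selector becomes its own match[] query parameter.
func federateURL(base string, selectors []string) (string, error) {
	u, err := url.Parse(base)
	if err != nil {
		return "", err
	}
	u.Path = "/federate"
	q := url.Values{}
	for _, s := range selectors {
		q.Add("match[]", s) // values for the same key keep insertion order
	}
	u.RawQuery = q.Encode() // percent-encodes brackets, braces and quotes
	return u.String(), nil
}

func main() {
	// Hypothetical team server and selectors, for illustration only.
	addr, err := federateURL("http://prometheus-team-a:9090", []string{
		`{job="api"}`,
		`up`,
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(addr)
}
```

Keeping the selector list short is the main design lever here: federation is meant for pulling aggregated or selected series upwards, not for copying every series from every team server.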

This all sounds like it might make building your own solution a no-brainer.

Reality of building

If it sounds too good to be true, then it usually is. Unfortunately there are some realities that come with making the decision to build your own monitoring solution.

Firstly, there needs to be an acceptance that this is going to take a percentage of your time.

I don’t know what that percentage is, and I’m not sure that anyone ever does when they make the decision. In my experience, it’s usually higher than you initially expect. You are committing yourself not only to designing everything, but also to evangelising it, managing it, troubleshooting it and generally building a competency in-house.

A lot of companies already have experience with running monitoring systems themselves. Prometheus is going to provide better visibility into modern infrastructure, but it’s not going to magically remove the work of running your own system. You’ll need to evaluate whether your experience to date makes this an attractive proposition.

With Prometheus, if I were going to deploy it at any kind of scale, I’d bring in an expert. The server may be a single Go binary, but that doesn’t mean you can skip thinking things through and architecting them properly. You’re going to want a strategy for deployment, labels, service discovery, HA, federation, alerting and a bunch of other stuff. This is on top of deciding which metrics to actually collect and instrumenting your own applications.

Now, some of the above is true of SaaS products too, although they often automate much of this and also provide onboarding help to get set up.

Secondly, you have the issue of adoption. You can lead a horse to water but you can’t make it drink!

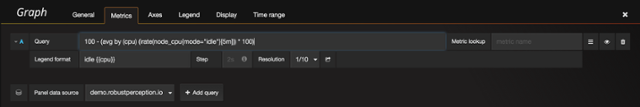

You’re going to need to get buy-in from a bunch of teams and characters. There is a learning curve to Prometheus, and it requires a bit of reading, which makes it a much harder sell. Grafana assumes you have done that reading and know how to write queries in PromQL, Prometheus’s query language.

As a seasoned monitoring person, I’m pretty happy constructing queries like that, but it’s a very steep learning curve for someone who may never have seen this stuff before.

This is in contrast to most SaaS tools, which typically provide an easy-to-use interface that guides even the most hardened non-readers of documentation through. The challenge lies in reducing friction, convincing people to invest time and, ultimately, getting people to use the monitoring. Make it too hard and a rogue Graphite server springs up, or perhaps an InfluxDB one. Sooner or later you’re talking about a project to consolidate shadow implementations from all over the org.

Other Considerations

Let’s say you have an awesome bunch of technical people who you’re sure would all get on board with adopting and running Prometheus. You’re happy with the time investment needed. Is there anything else you should think about?

Clearly it’s not ideal to have silos of operational data sitting on servers dotted around your environments, where each server holds only a few weeks’ worth at any one time. Managing long-term storage is another can of worms and adds further to the management overhead, especially if you go the OpenTSDB route. The ideal solution would be to send the data somewhere central that scales and persists for a reasonable length of time, so that you can easily run analytics across all of your services at a decent resolution.

Similarly, it’s a common goal to start opening up all kinds of data to other teams, some of which may not be very technical.

You also might want some company-wide visibility. It’s pretty common nowadays to have a central platforms team that helps oversee the automation that deploys code into production in a standard and secure way. This team would benefit from keeping an eye on the state of monitoring throughout the development teams so they can jump in and offer some training or assistance.

Surely the goal should be to have everything in one place and easily accessible to all, without having to spend your time worrying about and planning for manual sharding and constant reconfiguration.

Not only Prometheus

Prometheus is going to provide some very rich metrics through its exporters within the first few hours. Over the next few weeks you’ll start to instrument your own applications, and you’ll quickly end up with all of this data in Grafana, where it can be mined with a rich query language. You’ll set up Alertmanager and start to craft some actionable alerting rules.

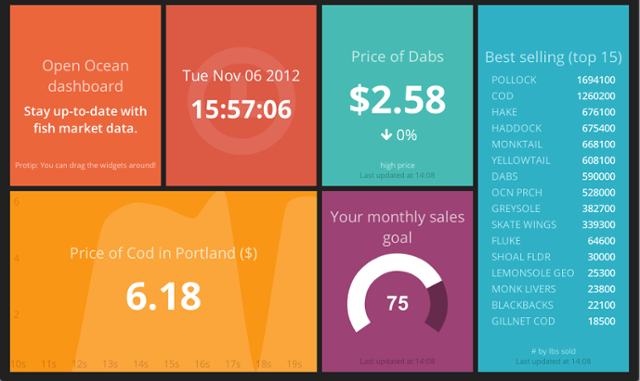

On top of this you’ll probably want to display some business metrics on a TV screen. Somebody will undoubtedly set up and configure Dashing for that.

The only problem with Dashing is that you need a developer to create and update the dashboards. I’m sure the business folk would love to create their own, too.

As an Ops person I’m very used to writing check scripts that collect a bit of data, perform some logic, and then output an up or down status. In a large company it’s quite unlikely that everything will be containerised, so these types of checks will still be valuable. I’m pretty sure I’d miss something like Nagios that runs check scripts, and I’d get frustrated at needing to write an exporter with that logic inside when what I really want is to throw together a quick bash script and have it exit 0 or 2 (OK or CRITICAL, in Nagios terms).
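
The convention a Nagios check relies on is small: print one status line and exit with 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN). In bash this is a handful of lines; the Go sketch below shows the same logic for comparison. The metric (`queue depth`), its value and the thresholds are hypothetical.

```go
package main

import (
	"fmt"
	"os"
)

// Nagios plugin exit codes.
const (
	OK       = 0
	Warning  = 1
	Critical = 2
	Unknown  = 3
)

// evaluate applies warning/critical thresholds to a measured value and
// returns the exit code plus the one-line status Nagios displays.
func evaluate(name string, value, warn, crit float64) (int, string) {
	switch {
	case value >= crit:
		return Critical, fmt.Sprintf("CRITICAL - %s is %.0f (>= %.0f)", name, value, crit)
	case value >= warn:
		return Warning, fmt.Sprintf("WARNING - %s is %.0f (>= %.0f)", name, value, warn)
	default:
		return OK, fmt.Sprintf("OK - %s is %.0f", name, value)
	}
}

func main() {
	// Hypothetical measurement; a real check would query the system here
	// and exit with Unknown if it could not.
	queueDepth := 1200.0
	code, msg := evaluate("queue depth", queueDepth, 500, 1000)
	fmt.Println(msg)
	os.Exit(code)
}
```

The scheduler only cares about the exit code and the first line of output, which is exactly why these checks are so quick to throw together.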

This dude in the Hangops #monitoringlove Slack channel said it more eloquently than I ever have.

joelesalas [12:32 AM]

@jgoldschrafe again, you want check-based monitoring and metric-based monitoring

to fully cover the problem space

So now you’ve got custom dashboards outside of Grafana, and Nagios has probably crept back in. You want to get more people involved and using the system, so you start to build front ends to make it more accessible, especially for the alerts. There’s talk of creating a wiki with all of the links and setting up single sign-on across everything. You’d also like more integrations, or to fix up the existing ones that live in GitHub repos with fewer than 20 commits. You’d like to start building some reports but realise there isn’t a single API endpoint to hit, so that needs work too.

Guess what? You’re now building the equivalent of a SaaS monitoring tool, except that the time and resources you can dedicate to it are nowhere near what a SaaS company already has. Given how many times I’ve seen this happen, I can also say with a high degree of certainty that whatever you build will eventually be thrown away. The next person will come in and rip it out in favour of their own preferences.

The Answer?

As mentioned at the beginning, there really isn’t a definitive answer to the question of build vs buy. You’re going to have to choose whether to spend time or money.

The real question is: how valuable is monitoring to your organisation? Given that monitoring has a direct impact on the quality of your online service and the efficiency of your developers, I’d say that for most companies it is highly valuable.

Do you want to buy your way to the finish line now and reap the benefits of not having to worry about managing a monitoring platform, rather than build something that is available instantly but presents problems down the road? Many companies choose to buy, which is why our segment of the market is exploding right now.

The elephant in the room is SaaS pricing. That’s a topic for another blog post, but I’ll tell you it’s a serious consideration at Outlyer. We’re constantly looking at our prices to make sure pricing is never an issue and that decisions are always based on customer time, cost, and value calculations.

Outlyer and Prometheus

One differentiator that Outlyer has over all of our SaaS competition is that we support open source exposition formats such as Prometheus’s. So if you do go down the build route, there isn’t much friction in moving to our platform later on. Instead of running the Prometheus server binary, you’d simply use the Outlyer agent to scrape your endpoints. Or, if you’d like to keep local Prometheus servers, we can scrape their federation endpoints.
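
Because the exposition format is plain text, any agent can scrape it. The sketch below is a deliberately simplified Go parser showing roughly what a scraper does with a `/metrics` response; it is not Outlyer’s agent or Prometheus’s own parser. It assumes label values contain no spaces, skips `# HELP`/`# TYPE` comments, and ignores optional timestamps.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseSamples reads Prometheus text exposition and returns a map of
// series (metric name plus any label block) to value. Simplified: it skips
// comments and blank lines, ignores optional timestamps, and assumes label
// values contain no spaces.
func parseSamples(body string) (map[string]float64, error) {
	samples := make(map[string]float64)
	sc := bufio.NewScanner(strings.NewReader(body))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		fields := strings.Fields(line) // series, value, [timestamp]
		if len(fields) < 2 {
			return nil, fmt.Errorf("malformed sample line: %q", line)
		}
		v, err := strconv.ParseFloat(fields[1], 64) // also accepts NaN, +Inf, -Inf
		if err != nil {
			return nil, fmt.Errorf("bad value in %q: %w", line, err)
		}
		samples[fields[0]] = v
	}
	return samples, sc.Err()
}

func main() {
	body := `# HELP myapp_requests_total Total requests handled.
# TYPE myapp_requests_total counter
myapp_requests_total{path="/"} 3
up 1
`
	s, err := parseSamples(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(s[`myapp_requests_total{path="/"}`], s["up"])
}
```

A production scraper also has to handle quoted label values with spaces and escapes, histogram and summary families, and staleness, which is part of why reusing an existing, well-tested implementation is attractive.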

Hopefully this has been useful to those thinking about what to do when moving into the cloud. I have made this decision many times in my career, and even today the result may differ depending on context. My personal belief is that, as with infrastructure, the trend over time will be towards buying. Running a monitoring system should not be a core competency for every company in the world; that time would be better spent on product.