I’ve always found it strange that Java is one of the most popular programming languages on the planet, yet getting stats out of a running JVM is extremely frustrating. I’ll go through the ways I’ve tried, from worst to best.

Nagios Scripts

There are many check scripts available on the internet and, sadly, they all work the same way. To collect metrics they need to talk to the application server’s JMX interface. That in itself isn’t too bad, although it usually takes a bit of fiddling to enable JMX. The bad bit is that talking to the JMX interface requires more Java: for check scripts that poll every 30 seconds, this means spinning up an entirely new Java process each time you want to grab some data.

The main plugin I’ve used is check_jmx. Although it works, it’s probably one of the less friendly ways to get metrics: you need to specify each metric you want to collect manually, and each one is a separate command. So, for every metric on every poll, that’s:

check script -> java -jar jmxquery.jar -> jmx

There are other scripts around that use friendlier Java clients like cmdline-jmxclient. These make creating checks slightly easier, but they don’t solve the underlying problem: Java, which was designed for long-running processes, is still being started over and over for a few seconds at a time, with all of the startup overhead that entails.

Unless you have massive servers, very few metrics to collect, and enjoy browsing jconsole in one window while hand-crafting check script arguments in another, I’d steer clear of these scripts.

Update: festive_mongoose on Reddit suggested enabling SNMP, something I’ve never seen done or tried, but it seems like a reasonably sane approach. Here’s a blog post describing how:

https://www.badllama.com/content/monitor-java-snmp

I’m not a massive fan of SNMP after writing a bunch of scripts to monitor Cisco devices, so my recommendation is still to go with the easier options in the following sections. That is just a personal peeve, though, so you may want to give it a try.

JMX-HTTP Bridge

This is probably the entry point for JVM monitoring. Wouldn’t it be cool if your JVM started up and presented a REST interface that was easy to browse and query, as a lot of more modern software does for its metrics? It doesn’t by default, but you can make it.

The two things I’ve tried are Jolokia and mx4j. Both worked well, but I prefer Jolokia so we’ll discuss that one.

To get Jolokia starting along with your JVM, just add the path to the Jolokia agent jar to your JAVA_OPTS:

-javaagent:/path/to/jolokia-jvm-<version>-agent.jar

That’s pretty much it. You now have a REST interface to JMX that you can query on /jolokia. At this point you can write simple bash scripts that curl for metrics or, if you want to do something a bit more complicated, Python scripts using the awesome Requests library, which is what I tend to do.
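As a rough sketch of that kind of script, here is a small helper that reads one MBean attribute using Jolokia’s GET read URL pattern (/read/<mbean>/<attribute>). It uses the standard library’s urllib instead of Requests so it has no dependencies, and it assumes the agent listens on its default port, 8778, on localhost; the function names are illustrative.

```python
import json
import urllib.request

# Assumption: Jolokia JVM agent on its default port (8778) on the local host.
JOLOKIA_URL = "http://localhost:8778/jolokia"


def read_url(base_url: str, mbean: str, attribute: str) -> str:
    """Build a Jolokia GET 'read' URL of the form <base>/read/<mbean>/<attribute>."""
    return f"{base_url}/read/{mbean}/{attribute}"


def read_attribute(base_url: str, mbean: str, attribute: str, timeout: float = 5.0):
    """Fetch a single MBean attribute and return the 'value' field of the JSON reply."""
    with urllib.request.urlopen(read_url(base_url, mbean, attribute), timeout=timeout) as resp:
        body = json.load(resp)
    # Jolokia reports its own status inside the JSON body, so check it explicitly.
    if body.get("status") != 200:
        raise RuntimeError(f"Jolokia error: {body.get('error')}")
    return body["value"]
```

For example, read_attribute(JOLOKIA_URL, "java.lang:type=Memory", "HeapMemoryUsage") should return a dictionary with the init, used, committed and max fields of the heap’s MemoryUsage, in bytes.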

As far as I can tell, hitting the REST interface has a negligible effect on performance. Certainly if you are just pulling out the common stuff like memory (heap, non-heap and garbage collection), it’s a good way to do it.
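For the garbage collection side, one approach is a wildcard read such as /read/java.lang:type=GarbageCollector,name=*, which (as I understand Jolokia’s pattern reads) returns a map from each matching MBean name to its attributes. The sketch below assumes that response shape: it sums collection counts and times across all collectors, and turns the change in total collection time between two polls into a rough percentage of wall-clock time spent in GC. The function names are illustrative only.

```python
from typing import Dict, Tuple

# Jolokia GET path for all garbage collector MBeans (wildcard read).
GC_READ_PATH = "/read/java.lang:type=GarbageCollector,name=*"


def total_gc(value: Dict[str, Dict[str, int]]) -> Tuple[int, int]:
    """Sum CollectionCount and CollectionTime (ms) over every collector in a wildcard read.

    The JVM reports -1 when a collector does not support a value, so clamp to 0.
    """
    count = sum(max(attrs.get("CollectionCount", 0), 0) for attrs in value.values())
    time_ms = sum(max(attrs.get("CollectionTime", 0), 0) for attrs in value.values())
    return count, time_ms


def gc_overhead_percent(prev_time_ms: int, curr_time_ms: int, interval_s: float) -> float:
    """Percentage of the polling interval spent in GC, from two cumulative CollectionTime totals."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return 100.0 * (curr_time_ms - prev_time_ms) / (interval_s * 1000.0)
```

Polled every 30 seconds, an overhead figure that stays high from poll to poll suggests the JVM is spending much of its time collecting rather than doing useful work.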

JMX to Graphite

Polling for metrics via a REST endpoint is fine if you’re just checking that you haven’t blown your memory or aren’t constantly garbage collecting every 30 seconds. But what if you want to stream real-time metrics out of the JVM into graphs? Other software makes this possible by holding a long-running connection open to the JMX port and using it to request metrics much more frequently.

The main piece of software I’ve used for this is JMXTrans. On Ubuntu it’s as simple as installing the package and putting some JSON config files into a directory. Some more detailed setup instructions can be found here:

https://github.com/outlyerapp/java-metrics

Although JMXTrans is easy to set up, I think the JSON config files are pretty horrible and quite hard to work with. Guiding people through the setup is also a bit of a pain: invariably they need to spend time getting it working on a single box, then write config management to roll it out everywhere. It isn’t exactly plug and play, and it is prone to errors that are hard to debug.
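One way to take some of the pain out of those JSON files is to generate them rather than hand-write them, so a single script (or your config management) produces one consistent file per environment. The sketch below is modelled on the older JMXTrans configuration layout (servers containing queries, each with outputWriters, using GraphiteWriter with a settings block). Field names have changed between JMXTrans versions, so treat the structure as an assumption and check it against the documentation for the version you install; host names and ports are placeholders.

```python
import json
from typing import Dict, List

# Assumption: older JMXTrans layout with a GraphiteWriter "settings" block.
GRAPHITE_WRITER_CLASS = "com.googlecode.jmxtrans.model.output.GraphiteWriter"


def jmxtrans_config(hosts: List[str], jmx_port: int,
                    graphite_host: str, graphite_port: int = 2003) -> Dict:
    """Build one JMXTrans config that polls heap and GC MBeans on every host."""
    writer = {
        "@class": GRAPHITE_WRITER_CLASS,
        "settings": {"host": graphite_host, "port": graphite_port},
    }
    queries = [
        {"obj": "java.lang:type=Memory",
         "attr": ["HeapMemoryUsage", "NonHeapMemoryUsage"],
         "outputWriters": [writer]},
        # Assumption: typeNames puts the collector name into the Graphite metric path.
        {"obj": "java.lang:type=GarbageCollector,name=*",
         "attr": ["CollectionCount", "CollectionTime"],
         "typeNames": ["name"],
         "outputWriters": [writer]},
    ]
    # JMXTrans examples typically quote the JMX port as a string.
    return {"servers": [{"host": h, "port": str(jmx_port), "queries": queries} for h in hosts]}


def write_config(path: str, config: Dict) -> None:
    """Write the config as pretty-printed JSON so diffs stay readable."""
    with open(path, "w") as fh:
        json.dump(config, fh, indent=2)
```

Keeping the list of MBeans in one place means adding a metric is a one-line change that your config management can then roll out everywhere.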

I believe you can also use collectd with its Java plugin to poll the JMX API more frequently without incurring the Java startup penalty each time. However, you have to stop somewhere, and I haven’t had time to try it yet.

JMX Profiling

More recently I started playing around with Java monitoring again and found the awesome StatsD JVM Profiler from Etsy:

https://github.com/etsy/statsd-jvm-profiler

Again, it’s a case of adding a -javaagent option when you start the JVM. That’s it: there are a few options you can pass in, but no horrible config files!
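The agent’s options go after an = on the -javaagent flag as comma-separated key=value pairs, as in the Jenkins example below. If you are rolling this out to several services, a tiny helper keeps the string consistent. This is a hypothetical sketch: server, port and profilers appear in the Jenkins example, while the prefix option and the colon separator for multiple profilers are my reading of the project’s README and should be checked against the version you use.

```python
from typing import Optional, Sequence


def statsd_agent_arg(jar_path: str, server: str, port: int = 8125,
                     profilers: Optional[Sequence[str]] = None,
                     prefix: Optional[str] = None) -> str:
    """Build a -javaagent flag for statsd-jvm-profiler from keyword options."""
    opts = [f"server={server}", f"port={port}"]
    if profilers:
        # Assumption: multiple profiler class names are colon-separated.
        opts.append("profilers=" + ":".join(profilers))
    if prefix:
        # Assumption: 'prefix' sets the metric name prefix.
        opts.append(f"prefix={prefix}")
    return f"-javaagent:{jar_path}=" + ",".join(opts)
```

Called with the jar path, the fingerprint.statsd.outlyer.com server and profilers=["MemoryProfiler"], this reproduces the STATSD_ARGS value used for Jenkins.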

For my first round of testing I decided to victimise our Jenkins server. It’s probably the wrong way to do it, but I just hacked the following into /etc/init.d/jenkins:

STATSD_ARGS="-javaagent:/usr/lib/statsd-jvm-profiler-0.8.2.jar=\
server=fingerprint.statsd.outlyer.com,port=8125,profilers=MemoryProfiler"

Then I modified the line in the do_start() init function to load it:

$SU -l $JENKINS_USER --shell=/bin/bash -c \
"$DAEMON $DAEMON_ARGS -- $JAVA $JAVA_ARGS $STATSD_ARGS -jar $JENKINS_WAR $JENKINS_ARGS" \
|| return 2

The Jenkins guys are probably turning in their graves at this point, but it was a quick test that I could revert very quickly if the metrics turned out to be rubbish.

After spending those two minutes wantonly breaking config management in the quest for monitoring satisfaction, I got a massive surprise. It just worked, and, not only that, the metrics it sends back are awesome.

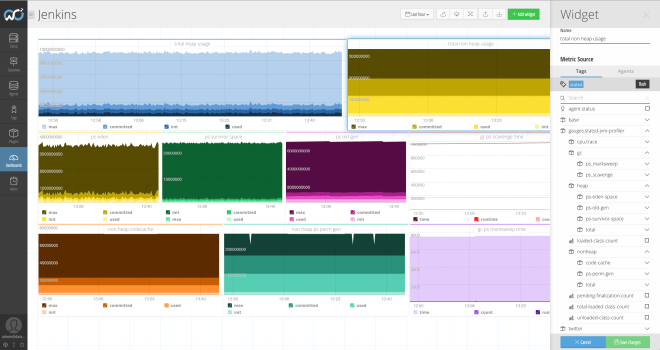

Here’s a quick dashboard I knocked up, with some of the available metrics listed in the right-hand metrics sidebar.

With this alongside Jolokia, a few dashboards and some alert rules you’d have quite good monitoring coverage.

I haven’t done much with the CPU metrics, simply because we don’t have a cool enough widget to display them yet. The blog post that piqued my interest in this project shows a flame chart being used for them, and we could probably add a widget for that at some point.

That blog post also talks about scaling problems, so you may want to be careful about how many metrics you send back, unless you’re using Outlyer, in which case we don’t mind :)

Prometheus JMX Exporter

You can think of the Prometheus JMX exporter as a kind of external Jolokia. Its purpose is to connect to your Java application and expose a set of metrics over an HTTP interface. If you are using Prometheus, or a monitoring system (like Outlyer) that can scrape the Prometheus metric format, then this is a very nice solution. The exporter itself is a Java program that can run either as a -javaagent or as a small standalone HTTP server, so with a tiny bit of configuration you can start it on each of your Java servers (or run it in a Docker container) and simply poll the /metrics endpoint.
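If you want to see what the exporter exposes before wiring it into a monitoring system, the Prometheus text format is simple enough to read with a few lines of code. This is a deliberately minimal parser sketch: it skips comment lines, ignores an optional trailing timestamp, and does not handle every corner of the format (for example, label values containing a closing brace). The metric names in any sample output depend on the exporter version and your rules config, and the scrape URL you pass in is up to you.

```python
import re
import urllib.request
from typing import Dict, Tuple

# `name{label="value",...} value [timestamp]`; labels and timestamp are optional.
_SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$')
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')

SampleKey = Tuple[str, Tuple[Tuple[str, str], ...]]


def parse_metrics(text: str) -> Dict[SampleKey, float]:
    """Map (metric name, sorted label pairs) to the sample value."""
    samples: Dict[SampleKey, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and HELP/TYPE comments
            continue
        match = _SAMPLE.match(line)
        if match is None:
            continue  # a line this minimal parser does not understand
        name, labels, value = match.groups()
        label_pairs = tuple(sorted(_LABEL.findall(labels or "")))
        samples[(name, label_pairs)] = float(value)
    return samples


def scrape(url: str, timeout: float = 5.0) -> Dict[SampleKey, float]:
    """Fetch a /metrics endpoint and parse it."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_metrics(resp.read().decode("utf-8"))
```

Keying samples by name plus sorted labels means the heap and non-heap series of the same metric stay distinct, which is what you want when building dashboards from them.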

Summary

I would personally avoid the Nagios check scripts that connect to JMX via a jar file.

You should set up Jolokia regardless, as it gives you a very simple way to collect any metric by polling the REST interface. Create or use Nagios check scripts that hit the /jolokia endpoint.
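A check script in that style might look like the sketch below. It assumes the Jolokia agent’s default port (8778) and reads HeapMemoryUsage; the thresholds, the function names and the heap_pct performance-data label are illustrative choices, not anything Jolokia or Nagios prescribes. It follows the usual Nagios plugin conventions: exit codes 0, 1, 2 and 3 for OK, WARNING, CRITICAL and UNKNOWN, with performance data after the | character.

```python
import json
import urllib.request
from typing import Tuple

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

# Assumption: Jolokia JVM agent on its default port on the local host.
HEAP_URL = "http://localhost:8778/jolokia/read/java.lang:type=Memory/HeapMemoryUsage"


def check_heap(heap: dict, warn_pct: float, crit_pct: float) -> Tuple[int, str]:
    """Turn a HeapMemoryUsage value into a Nagios status code and output line."""
    limit = heap["max"]
    if limit <= 0:  # max is -1 when no limit is defined; fall back to committed
        limit = heap["committed"]
    pct = 100.0 * heap["used"] / limit
    if pct >= crit_pct:
        status, word = CRITICAL, "CRITICAL"
    elif pct >= warn_pct:
        status, word = WARNING, "WARNING"
    else:
        status, word = OK, "OK"
    perf = f"heap_pct={pct:.1f}%;{warn_pct:g};{crit_pct:g};0;100"
    return status, f"{word} - heap {pct:.1f}% used | {perf}"


def main(url: str = HEAP_URL, warn_pct: float = 80.0, crit_pct: float = 90.0) -> int:
    """Query Jolokia, print the Nagios output line and return the exit code."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            body = json.load(resp)
        status, message = check_heap(body["value"], warn_pct, crit_pct)
    except Exception as exc:  # unreachable agent, bad JSON, missing fields
        status, message = UNKNOWN, f"UNKNOWN - {exc}"
    print(message)
    return status
```

Wrapped in a one-line entry point such as sys.exit(main()), this can be run by Nagios or by any agent that understands Nagios plugin output.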

If you want real-time generic metrics then the StatsD JVM Profiler is the way to go. For a line or two of config you get some amazing results.

If you really do need real-time custom metrics from your JVM, then I believe JMXTrans is a workable piece of software; it’s just really horrible to set up the JSON files. Luckily, with the two options above you probably don’t need it.

For applications you are building yourself in Java, you should definitely set up a StatsD client in your code. You can then send custom metrics from your application as it runs in production. You may also want to investigate Dropwizard.
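In Java you would normally pull in an existing StatsD client library rather than write one. To show how little is actually on the wire, here is the plain StatsD line protocol sketched in Python purely as an illustration: name:value|type sent as a UDP datagram, where type is c (counter), g (gauge) or ms (timer). The class name, the prefix and the default port 8125 (the same port used with the profiler above) are illustrative assumptions.

```python
import socket
from typing import Union

Number = Union[int, float]


def format_metric(name: str, value: Number, metric_type: str, sample_rate: float = 1.0) -> str:
    """Render one StatsD line: <name>:<value>|<type>[|@<sample_rate>]."""
    if metric_type not in ("c", "g", "ms"):
        raise ValueError(f"unsupported metric type: {metric_type}")
    line = f"{name}:{value}|{metric_type}"
    if sample_rate < 1.0:
        line += f"|@{sample_rate}"
    return line


class StatsdClient:
    """Fire-and-forget UDP sender; lost packets are simply dropped."""

    def __init__(self, host: str = "localhost", port: int = 8125, prefix: str = ""):
        self.address = (host, port)
        self.prefix = prefix
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    def _send(self, name: str, value: Number, metric_type: str) -> None:
        line = format_metric(self.prefix + name, value, metric_type)
        self.sock.sendto(line.encode("utf-8"), self.address)

    def incr(self, name: str, count: int = 1) -> None:
        self._send(name, count, "c")

    def gauge(self, name: str, value: Number) -> None:
        self._send(name, value, "g")

    def timing(self, name: str, millis: Number) -> None:
        self._send(name, millis, "ms")
```

Each call is a single datagram, so the application never blocks waiting on the monitoring system, which is a large part of why StatsD suits production code.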

The final piece is probably some kind of APM tool. Both New Relic and AppDynamics will do a good job of monitoring your application’s performance.

Update: September 2016

Nagios, Graphite and StatsD are starting to show their age. If you have a greenfield project, I would seriously consider switching to Prometheus. You can then use the Node Exporter (for host metrics), the JMX Exporter and the Prometheus client libraries to get a lot more visibility into the entire stack without a massive amount of setup time.

Some benefits to using Outlyer

We recommend that you install Jolokia on every JVM and tag the Outlyer agent on those servers with something like Jolokia or Java, or whatever tag you can use to differentiate those servers from the others.

Once they are tagged, you can immediately start to construct scripts in the browser that collect metrics in Nagios format. These are polled every 30 seconds and are immediately available to use in dashboards and alerts. You can also create different views on the same data by combining tags for your environments and services.

The other major benefit is that you can go from deciding which additional metric to grab from Jolokia to graphing and alerting on it in seconds. Once you have Jolokia and the Outlyer agent on a server, you never need to do anything other than create and edit scripts in the browser. You have a platform where everything is available and a central place where you can edit what to collect in real time.

To get the Etsy JVM profiler working, you don’t need to set up anything other than the -javaagent option on the server you wish to profile. Simply send the StatsD traffic to the Docker container in your account:

-javaagent:/usr/lib/statsd-jvm-profiler-0.8.2.jar=\
server=fingerprint.statsd.outlyer.com,port=8125

In the above case I downloaded the jar file into /usr/lib/ and then replaced fingerprint in the server hostname with my Outlyer container’s address. Some more details can be found here:

https://support.outlyer.com/hc/en-gb/articles/204924459-Hosted-StatsD

So, to confirm, the only real effort you guys need to go to is putting a couple of jar files into directories and adding a couple of -javaagent options to your JVMs: one so we can poll from Nagios scripts in Outlyer, and one so the JVM emits StatsD metrics directly to us. This should only take a few minutes to set up, and we’re available on Slack to help as always.

Update: September 2016

It’s really simple to start monitoring Java applications in Outlyer using our Prometheus plugins. Simply install an Outlyer agent on your Java server, start a Prometheus JMX exporter and use the Outlyer agent to scrape the metrics. You can also scrape metrics endpoints in your own application if you instrument it with a Prometheus client library.