The Dream

Create a monitoring system that dev and ops can easily setup without intimate knowledge of a monitoring product, supportive of organisational shifts to micro services where people who may want to add a monitoring check might not even know what Nagios is. Agents should pop up and collect config automatically, and deregister gracefully when environments shutdown. The agent should run Nagios scripts written in any language. We’re aiming for dropbox level of simplicity!

Well that was the plan anyway. We’re doing internal monitoring of operating systems and applications that live on them so we need an agent, and we have some strong ideas about how it all should work. In a parallel universe the Shinken team will have probably created their own NRPE agent in Python which I could have taken, modified and just written the server side, but alas we’re in a universe without that.

So first of all why didn’t we just use or modify NRPE? It’s been around long enough for all of the edge case problems to be fixed etc. I personally fancy doing more C development but for this task it just seemed like too much work. So we went with a cool dynamic language like the startup hipsters we are. Besides the language there’s a list of some other problems we had with NRPE which I’d like to frame within the context of our problem domain of providing a SaaS service. If you’re running traditional IT with reasonably static environments in your own data centers and aren’t even thinking about organising your development teams around features or sharing tools between ops and dev then these problems probably don’t apply. You probably don’t want a cloud monitoring product at all, but nevertheless you might find some of this interesting anyway.

The NRPE problems

- requires a port to be opened into your infrastructure

- auth is an allowed hosts file

- recent issues with SSL

- only responds to a centralised task scheduler

- can be a bit flaky sometimes

- requires a bunch of config (often done via puppet / chef)

- hostname and ip oriented in terms of identification

- written in C so not particularly nice to work on (my opinion only!)

- Basically, not very suitable for a cloud based monitoring system. Good enough for ops teams who can mitigate a lot of the above via configuration management and network layer security. Not very cool for our target market who are cloud services companies.

What we chose to build

Unfortunately this blog is going to look a bit like a series of bullet points. Mainly because I wrote the source material for the blog and then looked at the sheer volume of information and winced at trying to get it into a coherent volume of text. My co-founder David said to put all of the bullet points into Slides and embed, but as I always know best I’ve decided to add more paragraphs around the bullets and put some pictures in and hope nobody notices.

Here’s a quick list of what we wanted our own monitoring agent to do.

- an agent that connects outgoing only

- securely registers and de-registers

- require absolutely minimal config (ideally none)

- has its own internal task scheduler

- should pull its configuration from a central place (including plugins)

- use fingerprint identification (instead of ip / hostname)

- support bidirectional communication

- control other collectors and keep them alive (like collectd, diamond, fluentd etc)

- run out of the box plugins across any operating system without additional dependencies

- should be non-blocking so bad scripts don’t kill everything

- should start automatically and stay running

- should buffer and send metrics so data isn’t lost when the connection goes down

First attempt

Our first attempt was possibly a little naive. On the surface the agent problem looks pretty easy. I managed to knock up a prototype in a weekend and had it sort of working. Job done and move on? If only. Here’s the basic route we took with our first attempt.

- python agent (2.7)

- apscheduler for the internal task scheduler

- 10 seconds job to poll the server for new config / plugins

- persistent amqp connection for metric sending and rpc (bidirectional communication)

- Bitrock installer that installs dependencies on boxes (python and libraries)

Failures

We learnt a fair bit from our first agent. The most important lesson we learn’t is that we grossly underestimated how hard it would be to create a piece of software that’s both bulletproof and runs on every platform (and that does the crazy stuff we want it to do). Our most obvious pain points are listed below, I’m pretty sure we had more but I just can’t remember every time I cried.

- amqp works well for static connections but not for thousands of machines coming and going (we saw similar issues with Sensu but on a smaller scale - unfortunately they got far worse at large scale)

- occasional rpc disconnects and edge cases prevented reconnect (rpc is remote procedure call - it’s the bit that lets users run checks instantly by pressing ‘run’ in our app)

- exposing rabbit over the internet wasn’t the best idea for security

- we managed to kill our rabbit servers in almost every way imaginable, it became clear if we could do it without breaking a sweat it would be a serious vulnerability in future

- threads + async + rabbitmq leads to hell, I won’t say any more on the subject other than I’m still getting over the trauma of unclosed connections and rabbit dieing due to memory thresholds blowing

- the sheer volume of subtle differences between linux systems adds major complexity to the installer

- presence detection (getting instantly notified in the browser when agents come and go) required a special erlang rabbitmw plugin binary and nobody knew erlang.. it did however always work though which was cool

- windows doesn’t have a shebang, every plugin got executed like it was a .cmd / .bat or .exe

- rolling out the agent is hard to arrange on customers boxes so we needed to get to a v1 that didn’t require constant updates

- not enough operating system test coverage, we kept getting surprised by the subtleties in different distros

- feedback on the bitrock installer was it felt cumbersome

Successes

- rpc is awesome and leads to amazingly quick script iteration

- pyinstaller is great for freezing the agent so it runs on every platform

- apscheduler works really nicely as an internal cron

- fetching the config via the rest API and doing a dictionary diff of the json works really well

- the curl installation for single linux boxes is quick and user friendly (yes, I know many people hate this)

- windows actually always works which is surprising given we’ve not put any special logic into the agent for it

Second attempt

The main issue with our first proper agent was that it wasn’t 100% reliable across every platform. It’s immediately obvious in our monitoring system right now when an agent has broken because it sends us an email. Our goal is to get to zero false alarms and the rabbitmq stuff was just too unreliable when deployed to a few hundred boxes. Nobody wants to waste their time diagnosing failed components of a monitoring system so our agent needs to be the bit that never breaks. After over a month of pain with a direct AMQP connection to rabbitmq and significant effort to make it all work we relented. Over a pint at the pub we discussed other mechanisms ranging from plain old sockets all the way up to silly ideas like using jabber. In the end we settled on using a websocket connection. We’d move rabbitmq into the middle of our architecture because our app uses it as a communications bus, but add a tier in front of it that acted as a websocket to amqp proxy. Our backend is all nodejs and there are abundant libraries for doing this so the server side was done in a couple of days. The agent work took a bit longer but it has been like night and day since in terms of agent issues. Here’s a list of the bits we did for the second agent.

- python agent (2.7)

- apscheduler for internal task scheduler

- 10 second job to pull config / plugins

- web socket connection to replace the troublesome amqp

- bundle out of the box script dependencies into the agent and execute in-process (but in a separate thread so they don’t destroy the agent)

- add shells for windows

- create an agent collector so that agents connect to via websocket and write the messages onto our internal rabbitmq

- Metrics write to an internal bus and a single thread then writes them back via web socket

- automatically test on every OS (well, every image Rackspace provides anyway), 24 different operating systems in total

Failures

- we had to switch the web socket library a couple of times before we were happy

- the initial autobahn websocket library depends on twisted but this would have meant a complete rewrite to integrate it properly so we pulled that out again

- we settled on websocket-client which is much simpler

- still don’t work on every single platform automatically via the standard curl installer (arch, gentoo, freebsd and some OS’s that have stuff like upstart don’t work out of the box with the curl installer)

Successes

- Everything stays connected and plugin execution always works!

- Moving to web sockets completely solved the issues we had with rabbitmq

- In-process execution solved the issue of installer complexity and out of the box scripts always working

Third attempt

We haven’t actually started this and to be honest after months of iteration and testing we’re pretty happy with our current agent. Although, things can always be improved. We’re already thinking about..

Everything good from the 2nd version plus make the agent update itself automatically like dropbox does (via stub loader + rsyncing libraries). Possibly go fully twisted in the process. We’re not starting this for at least a few months.. we need time to recover from the intense pain we have experienced getting to an agent that works properly.

Other issues we’ve had along the way

The linux directory structure

After a lot of iteration we put our fingerprint file into /etc/outlyer/agent.finger (this generated key is what identifies the box). It then took an obscene amount of time to circle in on putting everything else into /opt/outlyer. This is literally the only location that works everywhere (yes smartos hypervisor I’m looking at you!). Obviously logs go into /var/log/outlyer.

Gentoo!

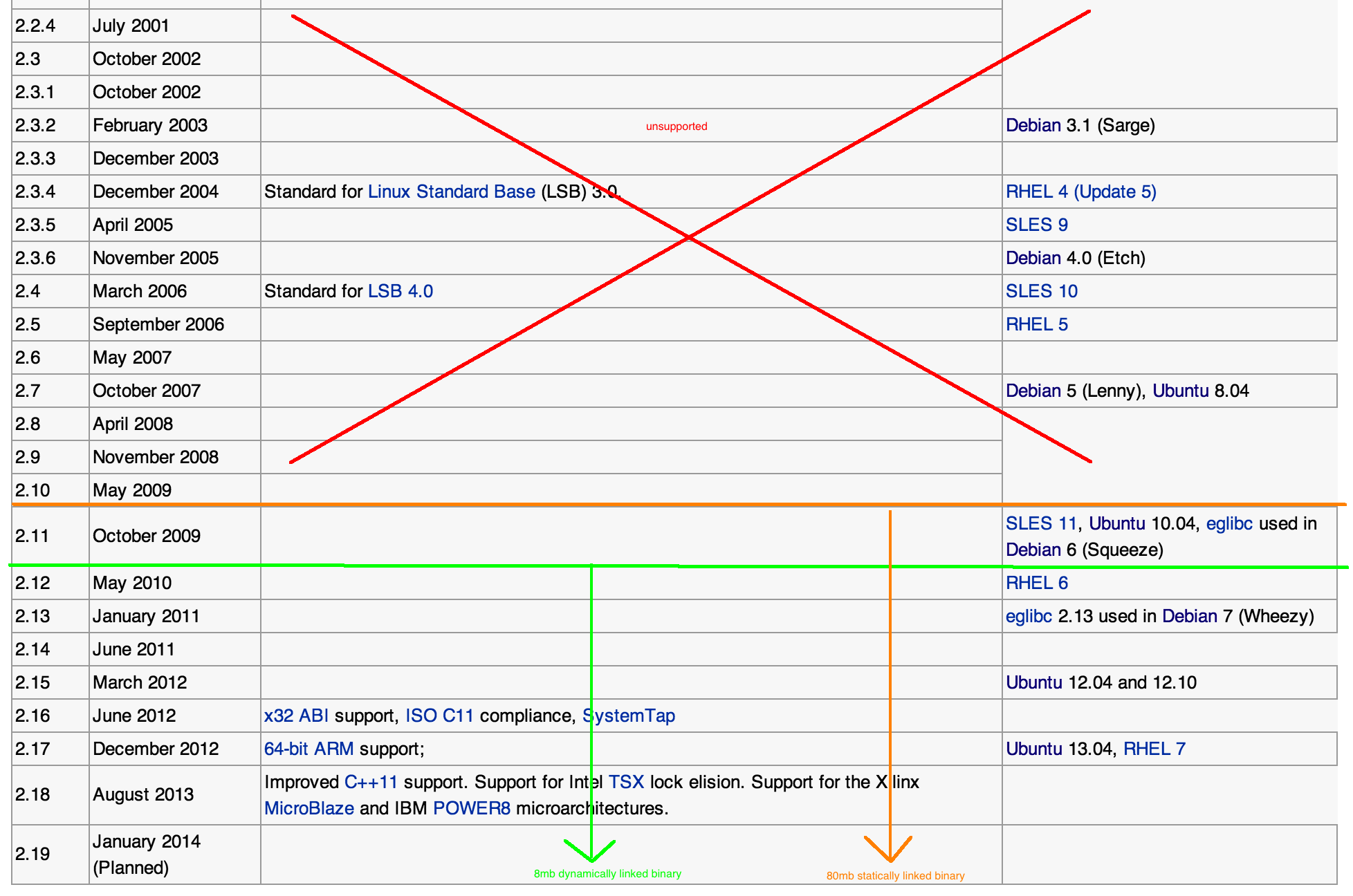

We put a stake in the sand and said we’d only support linux distros from Centos 6 onwards. Then I walk onto a customer site and they have Gentoo running from 2009. Luckily we’re using a Python agent so doing a custom build using pyinstaller only takes about an hour to setup (I had to get python 2.7 installed as an alternative before we can freeze).

I was so convinced that we had a supported list of platforms I even created a picture to highlight our stance. Three days later I was doing a bespoke compile for a potential customer that made the picture irrelevant.

Agent getting killed by OOM Killer

Still working through this one. Was caused by thousands of apache2 processes using 100k of memory. Basically the server needs fixing - we did alert to say the agent had died though obviously.

High CPU blocking agent

Not solved, this will get solved by handlers. This is a virtual centre server that is constantly running at 99% cpu and probably needs a bigger box. The idea of the handlers is that we’d abstract alarms from emails and put some logic in the middle that lets you tune stuff. So for this server we’d put it into a rule that means it has to be down for more than X minutes before we alert.

DNS issues

We solved this by adding additional logic around name resolution. Most monitoring systems don’t actually handle DNS being broken very well. Usually you’d lose complete monitoring visibility. We check DNS using the hosts usual mechanism, then last IP for data loop, then cycle through a list of IP’s we got from our central server on the last successful connect, then repeat until it works. Then we alert that DNS is broken via a dns check script. It’s far nicer getting a bunch of DNS broken alerts than Agent Down as they are more relevant.

Network flakiness

Not solved, but will get solved by handlers. Who uses virtualbox in production!!!

Automating the Windows build! Aaargh

I installed Freesshd and msysgit, pointed the default shell at bash and now use fabric like on the Linux hosts to trigger an automatic build on git checkin. On one hand I hate Microsoft for how hard they make automating tasks across boxes, but on the other hand I’ve seen first hand how difficult it is to make software run in uncontrolled environments. So there is a bit of respect there :)

Random Python memory stuff

I don’t even remember the exact problems we had any more. All I can remember was it being about 3am in the morning, I’m pretty sure I’d had a few beers and things were going really badly. So I was playing with a bunch of software that would inspect python executables and draw out colourful maps. I’m pretty sure none of this helped and the problem was found using something completely different.

Now we have a stable agent working in production for customers we’re pretty happy. We are making a few changes to it to let it start and monitor other collectors like CollectD, Diamond and FluentD. From past experience I know that CollectD doesn’t always stay running and has been known to kill a few of my boxes. We’re also trying to simplify the configuration of those other collectors by integrating their config file creation into our app (again making it easy for people who don’t have a clue about what things like CollectD even are to expose their benefits).

In summary

Creating an agent has probably been one of the hardest things we’ve had to do. Our backend metrics routing, riak time series development and a whole lot of other work hasn’t matched the hardship of getting a monitoring agent to always work reliably on customers boxes.

Luckily, after several months of work, we’re hopefully past all of that. Our agent is deployed to several hundred boxes and has been stable for a few weeks allowing us to get on with the advanced alert handlers, graphite port and dashboards.